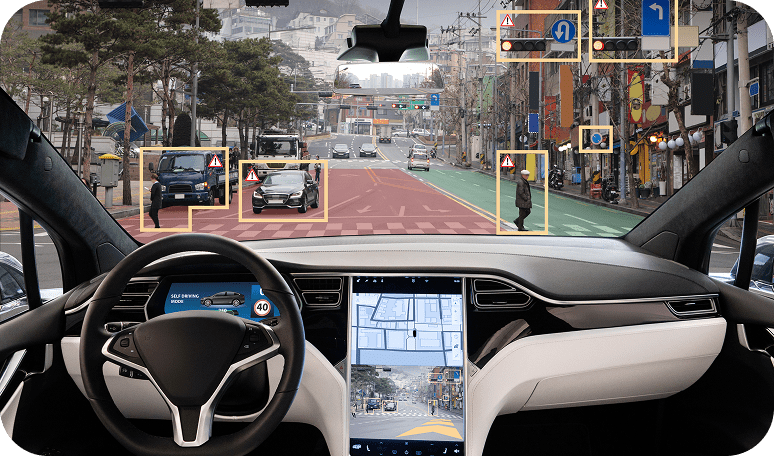

ADAS (Advanced Driver Assistance Systems)

We Enable:

• Real-time perception (object detection, lane keeping, pedestrian tracking)

• Sensor fusion (camera + LiDAR + radar)

• Adaptive cruise control and driver monitoring systems (DMS)

Why SiMa:

Low-latency response, energy-efficient ML pipelines, and support for diverse sensor inputs

Autonomous Driving (L2+ to L5)

We Enable:

• Environmental perception, path planning, and real-time decision-making

• Low-power inference across multiple neural networks

• Fail-safe perception for redundancy layers

Why SiMa:

Deterministic performance, tight control over model pipeline execution, and hardware/software co-optimization

In-Cabin AI & UX

We Enable:

• Occupant detection & classification

• Gesture recognition, voice control, and emotion/intent detection

• Personalized infotainment systems

Why SiMa:

Enables smarter human-machine interfaces with high model accuracy at minimal power draw

Predictive Maintenance/Smart Diagnostics

We Enable:

• ML-based detection of abnormal sounds and vibrations

• AI-based error code prediction and prioritization

• Real-time system monitoring and alerts

Why SiMa:

Efficient ML inference pipelines allow continuous diagnostics with minimal overhead

Driver & Vehicle Safety

Lane Keep Assist

Pedestrian & Cyclist Detection

Forward Collision Warning

Blind Spot Monitoring

Driver Drowsiness Detection

Parking Assistance

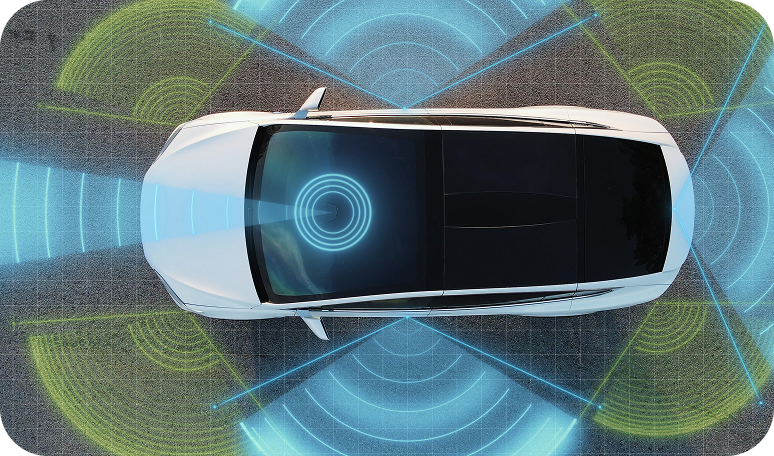

Autonomous Driving/Perception

Multi-modal Sensor Fusion (Camera + LiDAR + Radar)

Real-time Object Detection & Tracking

Traffic Sign & Signal Recognition

Road Edge and Lane Line Detection

Path Planning and Obstacle Avoidance

Low-latency Inference for Redundant Safety Systems

In-Cabin Monitoring

Child Presence and Seatbelt Detection

Gesture Recognition for HMI Control

Face Recognition for Personalization

Edge Voice Command Processing

Autonomous Vehicles

Multiple models, camera and LiDAR integration

SiMa.ai Solution Detail

Products

- Hardware: MLSoC and MLSoC Modalix

- Software: Palette and Palette Edgematic

Services

- Develop perception and object detection stack

- Enable customer pipeline on SiMa.ai chip

- Deploy across multiple platforms

Solution Overview

- Multimodal compute enabling perception, mapping, localization, and navigation

- Industry-leading frames-per-second performance

- Compute at the edge, enabling low latency

Challenges

- Multimodal, low-latency compute at the edge

- Sensor flexibility

- Low-power profile for a mobility platform

Solution

- Simultaneous Localization and Mapping (SLAM)

- 16-channel LiDAR input at 10 FPS

- Multi-camera input (6 cameras) with object detection at 30 FPS
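
For a rough sense of the input load these figures imply, the arithmetic can be sketched as follows. Only the camera count and the two frame rates come from the list above. Treating the six camera streams as one serialized queue is an assumption made purely for illustration and says nothing about how the platform actually schedules work; the function names are hypothetical.

```python
# Back-of-envelope input load for the sensor setup listed above.
# NUM_CAMERAS, CAMERA_FPS, and LIDAR_FPS are taken from the text;
# the "serialized stream" framing is an illustrative assumption.
NUM_CAMERAS = 6
CAMERA_FPS = 30
LIDAR_FPS = 10


def aggregate_camera_fps(num_cameras: int, fps: int) -> int:
    """Total camera frames arriving per second across all cameras."""
    return num_cameras * fps


def serial_budget_ms(total_fps: int) -> float:
    """Per-frame time budget in ms if all frames were handled one at a time."""
    return 1000.0 / total_fps


camera_frames_per_s = aggregate_camera_fps(NUM_CAMERAS, CAMERA_FPS)
budget_ms = serial_budget_ms(camera_frames_per_s)
frames_per_sweep = CAMERA_FPS // LIDAR_FPS  # frames per camera per LiDAR sweep

print(camera_frames_per_s)   # 180
print(round(budget_ms, 2))   # 5.56
print(frames_per_sweep)      # 3
```

Under that serialized assumption, each camera frame would have roughly 5.6 ms of processing time, and each camera delivers three frames for every LiDAR sweep.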

Takeaways

- Safe, state-of-the-art mobility platform

- Cost-effective scaling at the edge

“AI is transforming robotics, and at Virya, we’re harnessing its potential to deliver real value. Our partnership with SiMa.ai brings powerful edge-AI capabilities for real-time, low-power decision-making—enabling smarter, faster, and scalable solutions. We’re proud to be among the first in India’s autonomous mobility space to integrate their platform.”

Saba Gurusubramanian

CTO, Virya Autonomous Technologies Pvt Ltd